Introduction

Artificial intelligence (AI) is transforming industries at an unprecedented pace. From healthcare and finance to education and transportation, AI-powered systems are driving efficiency, innovation, and insight. Yet, as AI becomes more integrated into our lives, it raises pressing ethical questions. How can we balance the drive for innovation with the responsibility to ensure AI is safe, fair, and aligned with human values?

The Promise and Peril of AI

AI holds significant potential to solve complex problems, automate routine tasks, and augment human decision-making. In medicine, AI systems can help detect some diseases earlier, for example by flagging suspicious findings in medical images for a clinician to review. In finance, they streamline risk assessment and fraud detection. And in environmental management, AI models can help forecast weather and climate patterns and optimize resource allocation.

However, AI’s power comes with risks. Biases embedded in datasets can reinforce discrimination. Automated decision-making can lack transparency, leaving individuals unable to understand or challenge outcomes. Moreover, as AI systems gain autonomy, questions of accountability become critical—who is responsible when an AI makes a mistake?

Key Ethical Considerations

Fairness and Bias

AI systems are only as unbiased as the data they are trained on and the choices made in designing them. Historical biases in hiring, lending, or law enforcement data can be unintentionally encoded into algorithms, perpetuating inequality. Organizations must actively audit datasets, measure outcomes with fairness metrics (for example, comparing how often a model makes favorable decisions for different groups), and design AI to minimize discriminatory outcomes.
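As a rough illustration of what a fairness metric measures, the sketch below computes the selection rate (the share of favorable decisions) for each group, plus two common summaries of the gap: the demographic parity difference and the ratio between the lowest and highest rates. The function names, the 1 = favorable encoding, and the sample data are hypothetical; libraries such as Fairlearn offer maintained implementations, and no single metric settles whether a system is fair.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of favorable (1) predictions within each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += pred
    return {g: favorable[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups).values()
    return max(rates) - min(rates)


def selection_rate_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(predictions, groups).values()
    highest = max(rates)
    # If no group receives any favorable decision, the rates are trivially equal.
    return 1.0 if highest == 0 else min(rates) / highest


# Hypothetical hiring-screen output: 1 = advanced to interview, 0 = rejected.
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(predictions, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(predictions, groups))  # 0.5
print(round(selection_rate_ratio(predictions, groups), 2))  # 0.33
```

In this made-up example, group A is advanced 75% of the time and group B 25%, a ratio of about 0.33. In US employment contexts, a ratio below 0.8 (the so-called four-fifths rule) is often treated as a signal that a selection process deserves closer scrutiny.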

Transparency and Explainability

AI decision-making can be opaque, especially with complex models such as deep neural networks. Ethical AI requires that systems be explainable, giving users understandable reasons for the decisions that affect them. Transparency not only builds trust but also empowers individuals to challenge unfair or harmful outcomes.
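For simple models, an explanation can be as direct as listing how much each input contributed to a decision. The sketch below assumes a hypothetical linear credit-scoring model with made-up weights, threshold, and feature names; it returns the decision together with each feature's contribution, ranked by size. This direct breakdown only holds for linear models; for deep neural networks, practitioners typically rely on post-hoc approximation methods such as SHAP or LIME.

```python
# Hypothetical weights for an illustrative linear credit-scoring model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = 0.5
THRESHOLD = 0.0


def score_with_reasons(applicant):
    """Return (decision, score, reasons), where reasons ranks each feature's contribution."""
    # In a linear model, each feature contributes weight * value to the score.
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    decision = "approve" if score >= THRESHOLD else "decline"
    # Largest influence first, whether it pushed the score up or down.
    reasons = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return decision, score, reasons


applicant = {"income_k": 50, "debt_ratio": 0.4, "late_payments": 2}
decision, score, reasons = score_with_reasons(applicant)
print(f"Decision: {decision} (score {score:.2f})")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

Here the applicant is declined, and the ranked contributions show that two late payments weighed more heavily against them than their debt ratio, which gives them something concrete to question or correct.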

Privacy and Data Protection

AI thrives on data, but with great data comes great responsibility. Collecting, storing, and analyzing personal information raises privacy concerns. Ethical AI practices include data minimization (collecting only what a system actually needs), anonymization, and compliance with privacy regulations such as the EU's General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA).
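A minimal sketch of two of these practices, assuming a hypothetical record layout: data minimization keeps only the fields an analysis needs, and a keyed hash (HMAC-SHA256 from Python's standard library) replaces the direct identifier. Strictly speaking, this is pseudonymization rather than anonymization: anyone holding the key can re-link records, and GDPR still treats pseudonymized data as personal data. The field names and key are illustrative only.

```python
import hashlib
import hmac

# Hypothetical: the only fields this analysis needs. Everything else is dropped.
REQUIRED_FIELDS = {"user_id", "age_band", "region"}


def minimize(record, required=REQUIRED_FIELDS):
    """Data minimization: keep only the fields the stated purpose requires."""
    return {key: value for key, value in record.items() if key in required}


def pseudonymize_id(user_id, secret_key):
    """Replace an identifier with a keyed SHA-256 hash (pseudonymization, not anonymization)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record, secret_key):
    """Minimize a raw record, then pseudonymize its identifier if present."""
    kept = minimize(record)
    if "user_id" in kept:
        kept["user_id"] = pseudonymize_id(kept["user_id"], secret_key)
    return kept


# Illustrative only: load a real key from a secrets manager, never from source code.
key = b"example-key-do-not-use"
raw = {
    "user_id": "alice@example.com",
    "full_name": "Alice Example",
    "phone": "555-0100",
    "age_band": "30-39",
    "region": "EU",
}
clean = prepare_record(raw, key)
print(sorted(clean))  # ['age_band', 'region', 'user_id']
```

Because the hash is keyed and deterministic, the same user maps to the same pseudonym across records, so an analysis can still link a person's activity without storing their email address.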

Accountability and Governance

As AI systems take on more autonomous functions, defining accountability becomes crucial. Clear governance structures must be established, ensuring that humans remain responsible for critical decisions. Companies and governments must set policies to manage risks and assign liability in the event of errors.

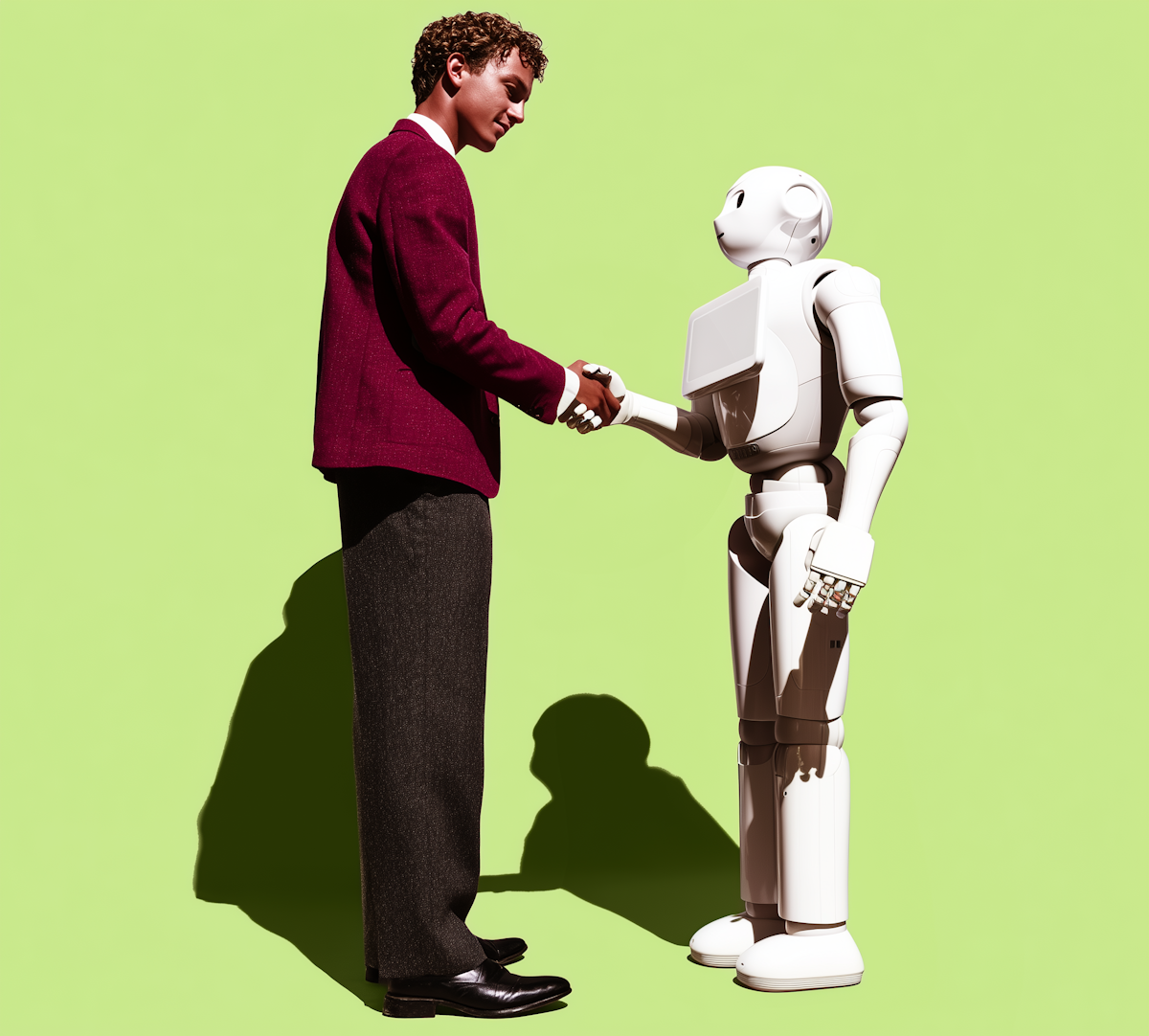

Human-Centric AI

Ethical AI prioritizes human well-being over technological capability. It encourages collaboration between humans and machines rather than replacement. Designers should ensure AI enhances human skills, respects autonomy, and promotes equitable access to its benefits.

Striking the Balance

Balancing innovation and responsibility in AI is not about halting progress—it’s about steering it wisely. Ethical frameworks, regulatory standards, and interdisciplinary collaboration are key. Organizations must embed ethics into AI development from day one, not as an afterthought.

Moreover, public engagement is essential. Society should have a voice in shaping the ways AI impacts daily life, from workplace automation to healthcare and public safety. By integrating ethics, transparency, and inclusivity into AI design, we can harness its potential while mitigating harm.

Final Thoughts

Artificial intelligence offers transformative opportunities, but its ethical implications cannot be ignored. Developers, policymakers, and society must work together to ensure AI innovations are responsible, transparent, and aligned with human values. Striking the right balance between innovation and responsibility is the challenge of our time—and meeting it will define the future of AI.

Full Name

22 January